Dedicated servers in Europe vs hyperscalers. Still worth it, or just a different cost curve?

TL;DR

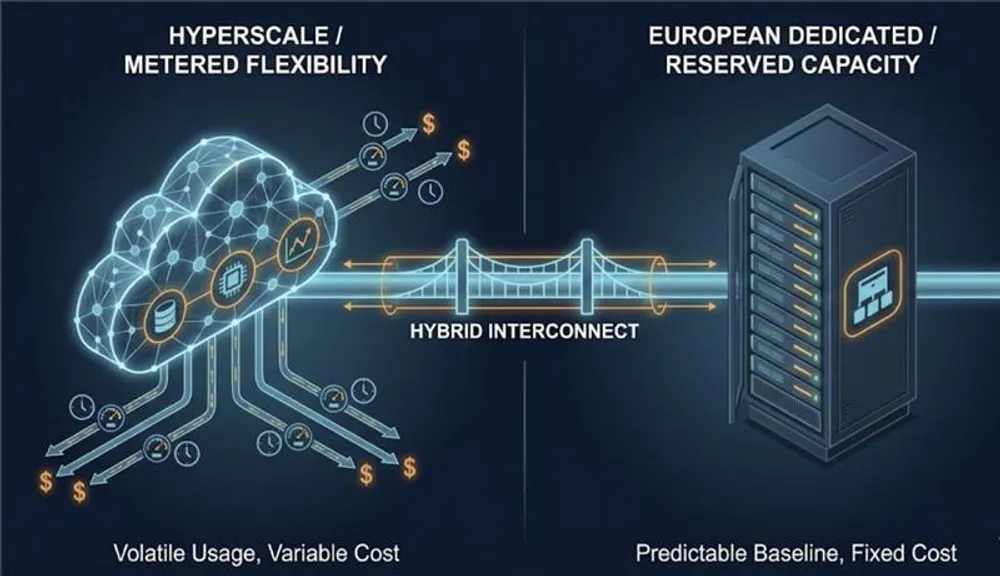

- The real choice is not “cloud vs dedicated”. It is metered costs vs reserved capacity.

- Cloud is great when demand is uncertain, spiky, or you rely on managed services.

- Dedicated servers in Europe are strong when workloads are heavy, sustained, and network-intensive, and you want predictable costs without dozens of meters.

- Performance variance and IP reputation issues are real drivers for moving away from shared VPS and some public cloud ranges.

- Hybrid infrastructure is common because most systems have mixed workload shapes. Done right, it is not a compromise. It is portfolio management.

Cloud vs Dedicated in Europe Is the Wrong Debate. The Real Question Is the Cost Curve.

This debate keeps returning in infrastructure circles because the trade-offs are real.

Not because engineers are nostalgic about hardware. Not because anyone is trying to “beat” hyperscalers. It comes back because cost curves, performance curves, and operational effort behave differently depending on what you run.

So let’s ask the right question.

The marketing version is “cloud vs dedicated.”

The engineering version is this.

Do you want infrastructure costs that move with usage, or infrastructure costs that stay tied to reserved capacity?

Once you frame it that way, the topic becomes less emotional and more useful. People are not arguing about ideology. They are arguing about unit economics, risk, and the day-to-day realities of operating systems.

Cloud often wins on elasticity, speed, and managed services.

Dedicated often wins on predictable performance and predictable cost for sustained workloads.

Most experienced teams end up in the middle. Hybrid infrastructure is not indecision. It is a rational response to how modern systems behave.

This post breaks down the trade-offs calmly and factually. No fear-mongering. No absolutes. No vague marketing language.

Why this question keeps resurfacing

The pattern is predictable.

You start in public cloud because it is fast. There are credits. Provisioning is easy. You can ship quickly without thinking about hardware procurement or capacity planning.

Then one of the common triggers hits.

Trigger 1: the workload stops being bursty

Many products start bursty and later become steady.

At the beginning, usage is irregular. Autoscaling helps. Costs feel fine because you are not paying for much when nothing happens.

Then the workload stabilizes and grows. AI inference runs day and night. Data processing becomes a daily or continuous pipeline. APIs become steady. Indexing never stops. CI runners stay busy.

At that point you are not buying elasticity anymore. You are renting capacity continuously.

That is where the economics shift.

Trigger 2: network costs become the main line item

A lot of teams focus on VM pricing, then get surprised by how network traffic is billed.

If your product sends data out to users, partners, or other systems, outbound traffic becomes a first-class cost driver. This includes media, datasets, downloads, API payloads, and off-platform backups.

Cloud egress is not an edge case. It is a pricing dimension you must design for.

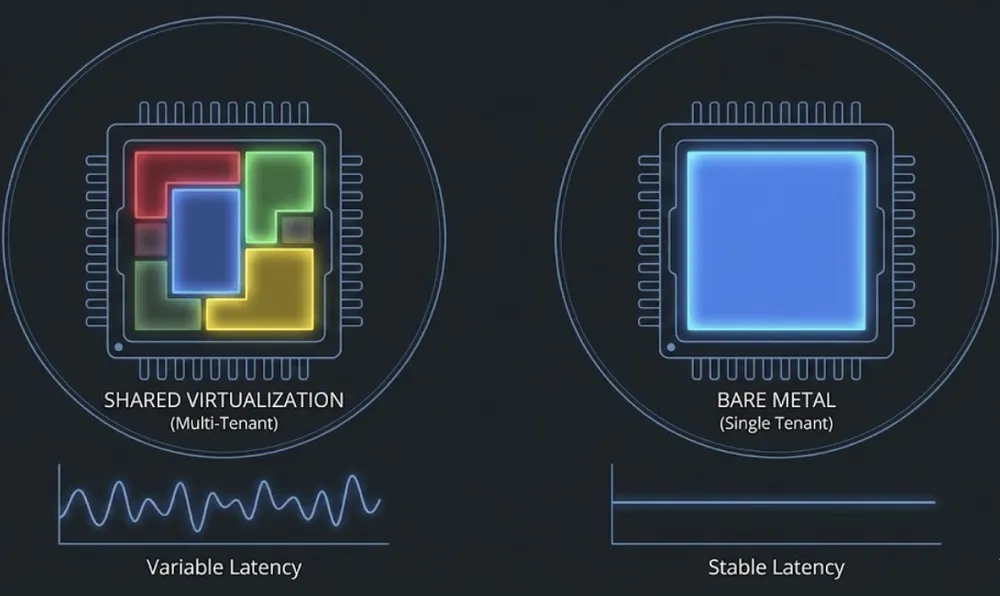

Trigger 3: performance becomes inconsistent

This is usually the most frustrating trigger because it feels random.

For months everything is fine. Then latency drifts. CPU performance varies. Disk throughput hits a ceiling. Network throughput changes. A “fair use” boundary becomes visible.

This is not a failure of engineering in public cloud. Multi-tenant virtualization has trade-offs. Shared storage has trade-offs. Overcommit ratios exist. Contention exists.

Some workloads tolerate variance. Others do not.

Trigger 4: IP reputation creates operational pain

This one is unglamorous, but very real.

Some teams move away from shared VPS and cloud IP ranges because email deliverability becomes painful, or because blanket blocks against certain ranges create reachability issues for enterprise users.

When that happens, the fix is not a better autoscaling policy. The fix can be a different IP reputation profile, which often means a different type of infrastructure.

These triggers explain why the topic stays popular. The decision is rarely philosophical. It is practical.

Why costs behave differently in cloud versus dedicated

The simplest way to understand “bare metal vs cloud” is to look at what you are actually buying.

Public cloud is largely a set of meters.

Dedicated is typically a bundled capacity envelope.

Cloud costs are metered across many dimensions

In public cloud, many things are billed as you consume them:

- Compute by the second, minute, or hour.

- Storage by GB-month.

- IOPS and throughput in some storage tiers.

- Load balancing by hours and usage dimensions.

- Managed databases by instance size, storage, and sometimes I/O.

- Network egress by GB.

- Cross-zone and cross-region traffic by GB.

Even if you discount compute with commitments, your architecture still has multiple meters running in parallel.

So your bill becomes a function of usage, architecture decisions, and mistakes.
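To make the "many meters" point concrete, here is a minimal Python sketch of a monthly bill priced meter by meter. The unit prices, meter names, and the `metered_bill` helper are hypothetical placeholders, not any provider's real rates, and real bills usually have more meters than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeterPrices:
    # Hypothetical unit prices in EUR; real prices vary by provider, region, and tier.
    compute_per_hour: float = 0.20      # per VM-hour
    storage_per_gb_month: float = 0.10  # block storage
    lb_per_hour: float = 0.025          # load balancer hours
    egress_per_gb: float = 0.08         # traffic to the internet
    cross_zone_per_gb: float = 0.01     # traffic between availability zones


@dataclass(frozen=True)
class MonthlyUsage:
    vm_hours: float
    storage_gb: float
    lb_hours: float
    egress_gb: float
    cross_zone_gb: float


def metered_bill(usage: MonthlyUsage, prices: MeterPrices) -> dict[str, float]:
    """Price each meter independently, then add a total line."""
    lines = {
        "compute": usage.vm_hours * prices.compute_per_hour,
        "storage": usage.storage_gb * prices.storage_per_gb_month,
        "load_balancer": usage.lb_hours * prices.lb_per_hour,
        "egress": usage.egress_gb * prices.egress_per_gb,
        "cross_zone": usage.cross_zone_gb * prices.cross_zone_per_gb,
    }
    lines["total"] = sum(lines.values())
    return lines


# Four VMs for a 730-hour month, one load balancer, 5 TB out, 2 TB cross-zone.
bill = metered_bill(MonthlyUsage(4 * 730, 500, 730, 5_000, 2_000), MeterPrices())
for meter, cost in bill.items():
    print(f"{meter:>13}: {cost:8.2f}")
```

With these made-up numbers, egress alone is more than a third of the total, and none of it is visible in the per-VM price.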

Dedicated costs are bundled

With dedicated servers, you typically buy a fixed pool:

- A fixed CPU and RAM envelope.

- A storage profile, often local disks.

- A port speed.

- A bandwidth bundle, or “unmetered” with fair use.

- IPs included or billed per IP.

- Optional add-ons like backup, private networking, or DDoS mitigation.

Your bill becomes a function of what you reserved upfront.

You are not charged for every small event inside the platform. You are paying for capacity.

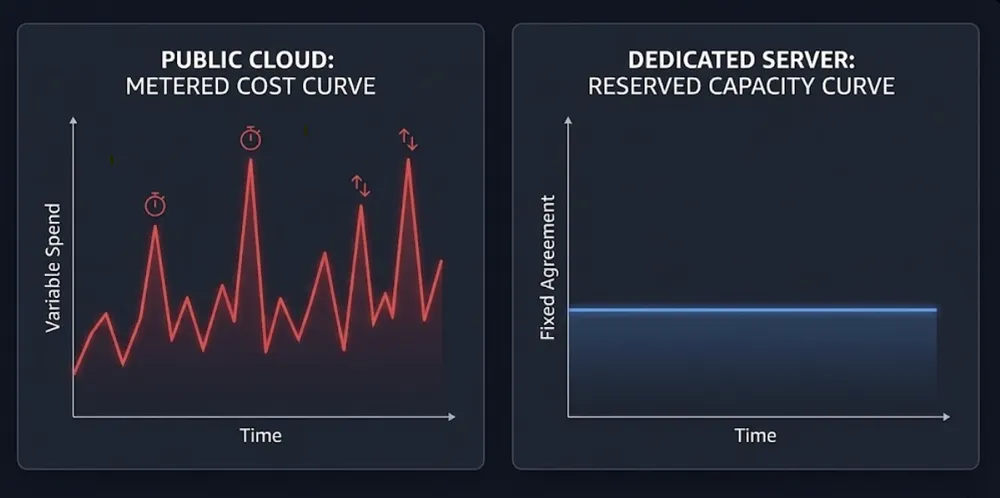

The baseline problem is the heart of it

Cloud is financially efficient when your baseline is low and your peaks are high.

Dedicated becomes financially efficient when your baseline is high and stable.

If you run 10 percent CPU most of the month and spike hard twice a day, cloud often fits well. You can scale up and scale down, then stop paying.

If you run 70 to 90 percent CPU all day, every day, cloud can become expensive because you are renting peak capacity continuously. You are paying margin on every hour of sustained use.

This is why AI workloads show up so often. Many AI workloads are not bursty. They are factories.
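The baseline-versus-peak argument can be checked with a few lines of arithmetic. The sketch below is a simplified model under stated assumptions: cloud capacity scales per hour and is billed per instance-hour, while dedicated capacity has to be sized for the busiest hour and is billed monthly. Both prices are hypothetical, and the model ignores commitment discounts, failover headroom, and staff time.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # simplified 720-hour month


def daily_profile(peak_hours: set[int], peak: int, baseline: int) -> list[int]:
    """Servers needed in each hour of one day."""
    return [peak if hour in peak_hours else baseline for hour in range(HOURS_PER_DAY)]


def cloud_cost(hourly_demand: list[int], rate_per_hour: float) -> float:
    # Autoscaling: pay only for instances that are running in a given hour.
    return sum(hourly_demand) * rate_per_hour


def dedicated_cost(hourly_demand: list[int], server_per_month: float) -> float:
    # Reserved capacity: provision for the busiest hour and pay for it all month.
    return max(hourly_demand) * server_per_month


# Hypothetical prices: a cloud VM at 0.20/hour vs a comparable server at 100/month.
CLOUD_RATE, SERVER_PRICE = 0.20, 100.0

spiky = daily_profile({9, 10, 19, 20}, peak=10, baseline=1) * DAYS_PER_MONTH
steady = daily_profile(set(), peak=8, baseline=8) * DAYS_PER_MONTH

for name, demand in (("spiky", spiky), ("steady", steady)):
    print(f"{name}: cloud={cloud_cost(demand, CLOUD_RATE):.0f} "
          f"dedicated={dedicated_cost(demand, SERVER_PRICE):.0f}")
# spiky: cloud=360 dedicated=1000
# steady: cloud=1152 dedicated=800
```

The spiky profile mirrors the 10 percent baseline case, and cloud comes out well ahead. The steady profile runs near full capacity all month, and the flat price wins. Swapping in your own hourly demand and real quotes is the useful part.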

Egress is not a footnote

If your product moves data out of the platform, outbound traffic can dwarf compute cost. Cross-zone traffic can also become meaningful when you spread systems for redundancy without modeling network behavior.

This does not mean cloud is always expensive. It means the cost curve can get steep when data movement is part of the product.

Dedicated often has a flatter network cost curve. There can still be limits, and “unmetered” still needs a fair policy. But the bill is often less sensitive to internal architecture details like cross-zone flows.

This is one reason cloud cost predictability can feel hard in practice. The more meters you have, the more ways you can leak spend.
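A rough way to see why the network curve is steeper in cloud is to price the same outbound volume both ways. This Python sketch assumes a hypothetical tiered per-GB egress price list and a hypothetical dedicated bundle with a fixed monthly allowance and a per-GB overage fee. Neither reflects a specific provider, and fair-use terms on "unmetered" ports are not modeled.

```python
import math

# Hypothetical tiered egress pricing: (upper bound of tier in GB, price per GB).
CLOUD_EGRESS_TIERS = [
    (100, 0.0),        # a small free allowance
    (10_240, 0.09),    # up to 10 TB
    (51_200, 0.085),   # 10 TB to 50 TB
    (math.inf, 0.07),  # beyond 50 TB
]


def cloud_egress_cost(gb: float, tiers: list[tuple[float, float]] = CLOUD_EGRESS_TIERS) -> float:
    """Bill each GB at the price of the tier it falls into."""
    cost, lower = 0.0, 0.0
    for upper, price in tiers:
        if gb <= lower:
            break
        cost += (min(gb, upper) - lower) * price
        lower = upper
    return cost


def dedicated_egress_cost(gb: float, included_gb: float, overage_per_gb: float) -> float:
    """Bundled bandwidth: nothing extra until the allowance is used up."""
    return max(0.0, gb - included_gb) * overage_per_gb


# Hypothetical bundle: 100 TB included, 0.01 per GB beyond that.
for tb in (1, 10, 50):
    gb = tb * 1024
    print(f"{tb:>2} TB out: cloud={cloud_egress_cost(gb):8.2f} "
          f"dedicated={dedicated_egress_cost(gb, included_gb=100 * 1024, overage_per_gb=0.01):.2f}")
```

In this sketch the cloud figure grows with every terabyte, while the dedicated figure stays at zero until the allowance runs out. That flat section is what makes forecasting easier.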

Managed services change the comparison

If you compare “VM price vs server price,” you often miss the point.

Cloud also sells engineering time.

Managed databases, queues, caches, identity, and observability reduce operational burden. They can reduce the need for a dedicated platform team.

If you rely heavily on managed services, cloud can be a net win even when raw compute is more expensive. You are buying fewer engineering hours, or avoiding projects you cannot staff.

If you run mostly self-managed services anyway, the managed advantage shrinks and raw compute plus network economics dominate.

Performance consistency and why it becomes decisive

Cloud performance issues are often intermittent. That is why they are hard to accept.

One week everything is fine. Then latency jitter appears. CPU performance varies. Disk throughput changes. Network throughput becomes unpredictable. A neighbor is noisy. Throttling shows up in places you did not model.

That is multi-tenancy and abstraction.
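Intermittent variance is easier to discuss once it is measured. One common approach is to track the median and a tail percentile side by side, because contention often shows up in the tail first. This sketch uses Python's standard `statistics.quantiles`; the sample data is invented for illustration. On Linux guests, CPU steal time is one signal worth correlating with tail growth.

```python
import statistics


def latency_summary(samples_ms: list[float]) -> dict[str, float]:
    """Median, 99th percentile, and the ratio between them."""
    cuts = statistics.quantiles(samples_ms, n=100)  # 99 cut points: p1 .. p99
    p50, p99 = cuts[49], cuts[98]
    return {"p50": p50, "p99": p99, "tail_ratio": p99 / p50}


# Hypothetical samples: same median, very different tails.
quiet_week = [10.0] * 950 + [12.0] * 50
noisy_week = [10.0] * 900 + [40.0] * 100

for label, samples in (("quiet", quiet_week), ("noisy", noisy_week)):
    s = latency_summary(samples)
    print(f"{label}: p50={s['p50']:.1f} ms p99={s['p99']:.1f} ms tail x{s['tail_ratio']:.1f}")
```

Both weeks have the same median, so a dashboard that only plots the median would show them as identical. The p99 and the tail ratio are what expose the difference.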

Dedicated servers remove one major variable:

- You own the entire host.

- You avoid host-level noisy neighbors.

- You can tune CPU pinning, NUMA, hugepages, and kernel behavior if needed.

- You often get more predictable throughput.

This is why dedicated keeps showing up for high performance compute and high throughput networking.
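As an example of substrate control, Linux exposes CPU affinity to user space, and Python wraps it as `os.sched_setaffinity` and `os.sched_getaffinity`. The sketch below pins the calling process to a chosen set of CPUs; `pin_to_cpus` is a hypothetical helper name. It is Linux-only and deliberately minimal: affinity alone does not stop other work from being scheduled on those cores (isolating them needs kernel or cgroup configuration such as the `isolcpus` boot parameter or cpusets), and inside a VM the pinned CPUs are virtual CPUs whose placement on physical cores is still up to the hypervisor.

```python
import os


def pin_to_cpus(cpus: set[int]) -> set[int]:
    """Restrict the calling process to the given CPU ids and return the
    affinity mask the kernel actually applied. Linux only."""
    if not hasattr(os, "sched_setaffinity"):
        raise OSError("os.sched_setaffinity is not available on this platform")
    os.sched_setaffinity(0, cpus)  # pid 0 = the calling process
    return os.sched_getaffinity(0)


if hasattr(os, "sched_getaffinity"):
    allowed = sorted(os.sched_getaffinity(0))
    # Pin to the first CPU this process is allowed to use, then restore.
    print("pinned to", pin_to_cpus({allowed[0]}))
    os.sched_setaffinity(0, allowed)
```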

The trade-off cannot be avoided.

Dedicated does not magically give you resilience. If you need multi-zone redundancy, you design it. If you need instant capacity, you keep spare capacity or accept slower provisioning.

So the real trade is control and stability versus elasticity and convenience.

IP reputation and email deliverability

This topic is easy to dismiss until it becomes your incident.

Some teams move away from shared VPS and cloud ranges because email deliverability becomes a constant fight. Blanket range blocks happen. Aggressive blocklists exist. Legitimate email ends up in spam. Some sites become unreachable for enterprises using strict filters.

Dedicated servers can help because you often get a cleaner IP history or a more controllable range.

But it is not a silver bullet.

Deliverability today depends heavily on SPF, DKIM, DMARC, sending patterns, and reputation. A clean IP helps, but it does not replace expertise. For many businesses, outsourcing email remains rational.

The useful lesson is broader.

Infrastructure decisions are sometimes driven by edge constraints, not CPU price. IP reputation is one of those constraints.

DDoS protection is not exclusive to cloud

There is a common assumption that DDoS mitigation is “a public cloud thing.” It is not.

Hyperscalers do have massive networks and mature capabilities. That can be a real advantage depending on your threat model.

But dedicated and European infrastructure providers can also offer DDoS mitigation, often integrated into their own networks.

The difference is usually not whether mitigation exists. The difference is coverage, scale, integration effort, response time, and pricing.

If DDoS is material to your business, treat it as a requirement and ask concrete questions about coverage and behavior during attacks.

Virtualization on bare metal is a real middle ground

Another outdated idea is that dedicated means manual provisioning and no flexibility.

You can run virtualization or private cloud stacks on dedicated hardware:

- VMware for mature virtualization.

- OpenStack for private cloud primitives.

- OpenNebula for a simpler VM cloud model.

- Kubernetes on bare metal for container workloads with substrate control.

With proper provisioning and automation, bare metal can feel cloud-like in day-to-day workflows.

The honest constraint is not technology. It is scope.

Building a good internal platform requires skill, time, and discipline. For a small team, the human cost can erase savings. For a capable team with stable workloads, it can be a competitive advantage.

When dedicated servers in Europe make sense

Dedicated is not “always cheaper.”

Dedicated is often flatter.

Dedicated servers in Europe make sense when your workload has characteristics like these.

Sustained, heavy compute

- AI inference that runs constantly.

- CPU-bound processing.

- Steady high-QPS APIs.

- Encoding, indexing, pipelines.

If utilization is consistently high, dedicated economics tend to improve because you pay for a box you actually use.

High or predictable outbound traffic

If your product ships data, cloud egress can dominate cost. Dedicated often offers more stable network pricing, which improves forecasting.

Performance sensitivity

If you care about jitter, stable throughput, consistent disk I/O, or high packet rates, dedicated reduces host-level variability.

Control over the substrate

If you need kernel tuning, CPU pinning, NUMA awareness, hugepages, or GPU pass-through, dedicated gives you options that are harder to guarantee in multi-tenant environments.

Fewer billing surprises

Metered systems punish blind spots. Dedicated simplifies forecasting because capacity is fixed.

It does not remove operational responsibility. It reduces the number of meters that can surprise you.

When dedicated servers in Europe do not make sense

Dedicated is the wrong tool in plenty of cases.

Spiky workloads and uncertain demand

If demand is uncertain, paying for idle capacity is waste. Cloud elasticity is often the right default.

Heavy reliance on managed services

If your architecture depends heavily on managed databases, queues, serverless, or provider-native analytics, moving away can become a rewrite. Sometimes justified. Often not.

Global distribution requirements

If you need multi-region presence quickly, hyperscalers have structural advantages. You can still build globally on European infrastructure, but it requires more design effort.

Small teams without platform capacity

Dedicated shifts responsibility onto you. Patching, monitoring, backups, HA design, and incident response do not disappear.

If you cannot staff it, cloud is not laziness. It is focus.

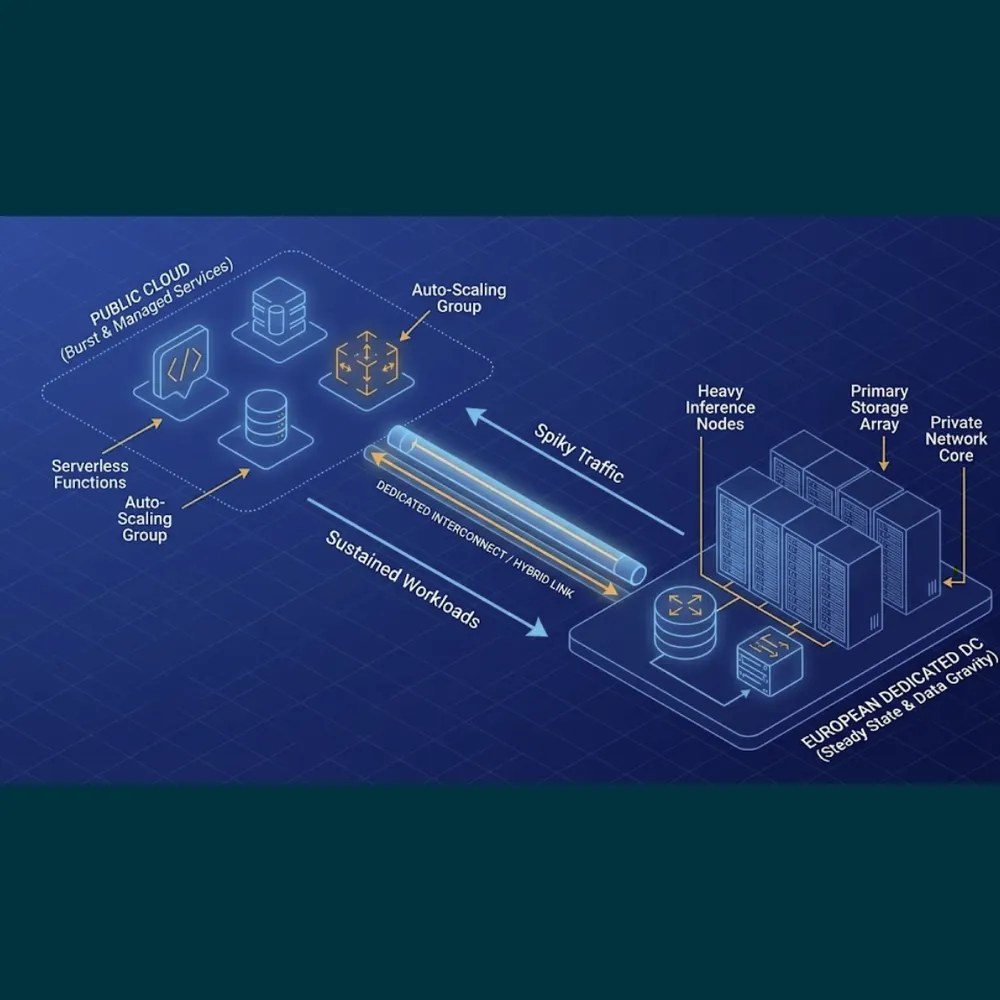

Hybrid infrastructure is common because it matches reality

Most real systems are not one workload shape. You usually have a mix:

- A steady baseline.

- Bursts from batch, launches, or seasonality.

- Sticky state and portable stateless services.

- Constraints around where data lives.

- Constraints around latency and geography.

Hybrid infrastructure lets you place each component where it fits best.

That is why “it depends” is often the most correct answer.

Pattern 1: baseline on dedicated, burst in cloud

Run steady compute on dedicated. Use cloud for overflow, experiments, and short-lived workloads.

The risk is bad data flow design. If your burst jobs constantly pull data out of cloud or push data back in, network cost can erase the benefit.
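The data-flow risk in this pattern is easy to underestimate, so it helps to put both costs in one comparison. This is a minimal sketch with hypothetical prices. It assumes inbound transfer into the cloud is free (common on hyperscalers, but check your provider) and charges only data leaving the cloud. `BurstPlan` and `bursting_pays_off` are illustrative names, not an existing library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BurstPlan:
    instance_hours: float    # cloud overflow capacity used per month
    rate_per_hour: float     # hypothetical on-demand price per instance-hour
    gb_leaving_cloud: float  # data copied from cloud back to dedicated per month
    egress_per_gb: float     # hypothetical cloud egress price


def burst_cost(plan: BurstPlan) -> float:
    # Inbound transfer is assumed free, so only data leaving the cloud is charged.
    compute = plan.instance_hours * plan.rate_per_hour
    network = plan.gb_leaving_cloud * plan.egress_per_gb
    return compute + network


def bursting_pays_off(plan: BurstPlan, extra_dedicated_per_month: float) -> bool:
    """True if overflowing to cloud beats buying enough extra dedicated
    capacity to absorb the peaks in-house."""
    return burst_cost(plan) < extra_dedicated_per_month


# Same compute, different data flow; two extra servers would cost 200/month.
lean = BurstPlan(200, 0.20, gb_leaving_cloud=50, egress_per_gb=0.08)
chatty = BurstPlan(200, 0.20, gb_leaving_cloud=2_048, egress_per_gb=0.08)
print(bursting_pays_off(lean, 200.0), bursting_pays_off(chatty, 200.0))  # True False
```

The two plans use identical compute. Only the volume of data coming back differs, and that alone flips the answer.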

Pattern 2: state on dedicated, stateless in cloud

Keep databases and data-heavy systems on dedicated for predictability. Run stateless services in cloud to scale quickly.

This can work well if you model latency and traffic properly.
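Modeling latency and traffic for this split can start very simply: count the sequential round trips from the stateless tier to the data tier, and estimate the bytes that cross between sites. The round-trip times, query times, and traffic figures below are hypothetical placeholders, and the helper names are illustrative; measure your own network paths before relying on any of them.

```python
SECONDS_PER_MONTH = 730 * 3600


def request_latency_ms(service_ms: float, sequential_queries: int,
                       rtt_ms: float, query_ms: float) -> float:
    """Each sequential query pays one network round trip plus its own execution time."""
    return service_ms + sequential_queries * (rtt_ms + query_ms)


def cross_site_gb_per_month(requests_per_second: float, bytes_per_request: float) -> float:
    """Traffic between the stateless tier and the data tier, in GB (10^9 bytes)."""
    return requests_per_second * bytes_per_request * SECONDS_PER_MONTH / 1e9


# Hypothetical: 12 sequential queries of 1 ms each, 20 ms of application work.
same_site = request_latency_ms(20, 12, rtt_ms=0.3, query_ms=1.0)
cross_site = request_latency_ms(20, 12, rtt_ms=8.0, query_ms=1.0)
print(f"same site: {same_site:.1f} ms, cross site: {cross_site:.1f} ms")
print(f"{cross_site_gb_per_month(200, 20_000):,.0f} GB/month between sites")
```

A chatty access pattern that is harmless inside one data center multiplies the cross-site round-trip time, which is why reducing sequential queries, for example by batching them, tends to matter once the tiers are split.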

Pattern 3: managed control plane in cloud, workers on dedicated

Use cloud-managed services where they save time. Run worker pools on dedicated, where the cost of sustained compute stays flat.

This is common in processing and AI pipelines. The risk is ignoring network cost and latency.

Pattern 4: dedicated primary, cloud for monitoring and DR staging

Run production on dedicated. Use small cloud instances for monitoring, external checks, and disaster recovery staging.

This reduces blast radius. DR still requires discipline. It is not real until you rehearse it.

Hybrid is not free. It gives you choice and more surfaces to manage. Done intentionally, it is often the most defensible architecture for mixed workloads.

Data sovereignty and EU ownership, without slogans

Treat data sovereignty as a set of requirements, not a debate.

It usually comes down to four practical questions:

1. Data residency. Where is data stored and processed?

2. Operational control. Who operates infrastructure and support, and where?

3. Legal jurisdiction. Which laws can compel access?

4. Contractual clarity. What do the terms say about access and auditability?

Dedicated servers in Europe can help with residency and operational control. European providers can as well. Hyperscalers also offer EU regions, and for many workloads that is sufficient.

Ownership and jurisdiction matter for some organizations. Not for all. Treat it as a requirement only when it is part of your risk model or customer demand.

A decision framework you can defend

Stop arguing “cloud vs dedicated” and start choosing based on constraints.

Choose cloud first when

- Demand is uncertain or spiky.

- You need managed services to move fast.

- You want rapid scaling with low operational overhead.

- Variable monthly spend is acceptable for speed.

Choose dedicated first when

- The workload is heavy and sustained.

- You need stable performance and throughput.

- Egress and network are major cost drivers.

- You want simpler forecasting and fewer meters.

- You can operate it well.

Choose hybrid infrastructure when

- Your system has both steady and bursty parts.

- You want managed services without paying hyperscaler rates for all compute.

- Data gravity and compute demand live in different places.

- You want freedom of choice over time.
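The framework above can be written down as a rule of thumb. This is a deliberately simplified Python encoding with hypothetical field names; it gives a starting point for discussion, not a verdict, and it is no replacement for modeling costs and latency with real numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    spiky_or_uncertain: bool
    relies_on_managed_services: bool
    sustained_heavy: bool
    performance_sensitive: bool
    network_heavy: bool
    can_operate_platform: bool


def suggest_placement(w: Workload) -> str:
    """Rule-of-thumb starting point: 'cloud', 'dedicated', or 'hybrid'."""
    cloud_signals = w.spiky_or_uncertain or w.relies_on_managed_services
    dedicated_signals = w.sustained_heavy or w.performance_sensitive or w.network_heavy
    if dedicated_signals and not w.can_operate_platform:
        return "cloud"  # without platform capacity, dedicated shifts too much risk to the team
    if cloud_signals and dedicated_signals:
        return "hybrid"
    if dedicated_signals:
        return "dedicated"
    return "cloud"  # elasticity is the safer default when nothing argues otherwise


inference_fleet = Workload(False, False, True, True, True, can_operate_platform=True)
print(suggest_placement(inference_fleet))  # dedicated
```

The staffing check runs first on purpose: if no one can operate dedicated infrastructure well, the other signals matter much less.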

Where Worldstream fits

Worldstream stays deliberately focused.

We provide infrastructure only. Bare metal, private cloud, and scalable cloud infrastructure.

We operate our own data centers and network in the Netherlands. We build with in-house engineering. The goal is predictable agreements, predictable pricing, freedom of choice, and no lock-in by design.

That is Solid IT. No Surprises.

This is not an argument against hyperscalers. They solve real problems, especially when elasticity and managed services are the priority.

It is an argument for choosing infrastructure that matches workload reality, and keeping options open.

Conclusion: stop choosing teams. Choose constraints.

So, are dedicated servers in Europe still worth it?

Yes, when the workload is sustained, performance-sensitive, network-heavy, or forecasting needs to be boring and reliable.

No, when your value comes from elasticity, managed services, and rapid scaling, and when your team cannot afford platform work.

Most of the time, the best answer is hybrid infrastructure, designed intentionally.

Not as a messy compromise.

As a way to match each workload to the right cost model, operational burden, and risk profile.

Freedom of choice beats dogma. Every time.

FAQs

Are dedicated servers cheaper than cloud?

Sometimes, especially for sustained workloads with high utilization or significant outbound traffic. Cloud can be cheaper for spiky demand and when managed services reduce operational cost. The cost curve depends on baseline usage and data movement.